picture for the htbd presentation

parent dc616e5a

- glucose_ts/data/tf_data_windows.py: 6 additions, 4 deletions
- glucose_ts/models/lstm.py: 8 additions, 20 deletions
- glucose_ts/models/utils.py: 0 additions, 2 deletions
- presentations/htbd_presentation.pdf: 0 additions, 0 deletions
- presentations/htbd_presentation.tex: 2 additions, 3 deletions
- presentations/images/cell_parameter.png: 0 additions, 0 deletions
- presentations/images/lstm_examples.png: 0 additions, 0 deletions
- presentations/images/microscopic_cells.jpg: 0 additions, 0 deletions
- presentations/images/microscopic_images.png: 0 additions, 0 deletions
- presentations/images/old_new_image.png: 0 additions, 0 deletions
- presentations/images/raman_sample.png: 0 additions, 0 deletions
- presentations/images/residuals.png: 0 additions, 0 deletions
- presentations/images/sphinx_docu.png: 0 additions, 0 deletions
- presentations/images/time_series_dataset.png: 0 additions, 0 deletions
- tests/data/test_tf_data_windows.py: 4 additions, 4 deletions

- presentations/images/cell_parameter.png (0 → 100644, 163 KiB)
- presentations/images/lstm_examples.png (0 → 100644, 146 KiB)
- presentations/images/microscopic_cells.jpg (0 → 100644, 4.51 KiB)
- presentations/images/microscopic_images.png (0 → 100644, 159 KiB)
- presentations/images/old_new_image.png (0 → 100644, 169 KiB)
- presentations/images/raman_sample.png (0 → 100644, 83.1 KiB)

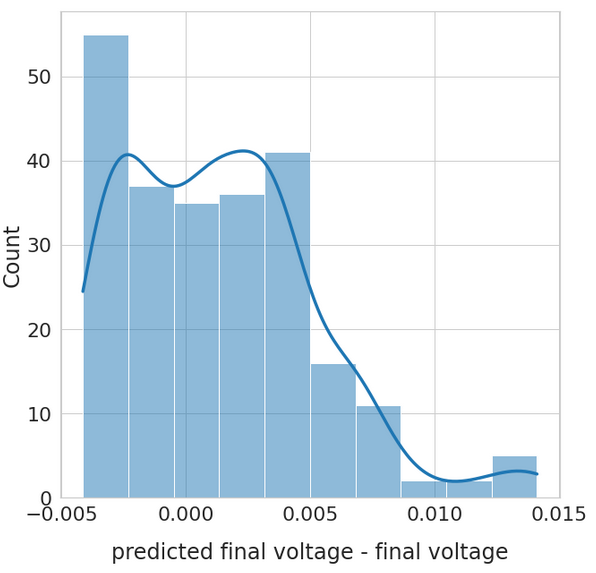

- presentations/images/residuals.png (0 → 100644, 59.5 KiB)
- presentations/images/sphinx_docu.png (0 → 100644, 185 KiB)
- presentations/images/time_series_dataset.png (0 → 100644, 275 KiB)